Cropnet

As the name suggests, this project involved training a model to crop out documents from a background. Essentially this can be classified as a segmentation task that would need massive annotation of data with a mask on the foreground object which is to be used for supervised segmentation training.

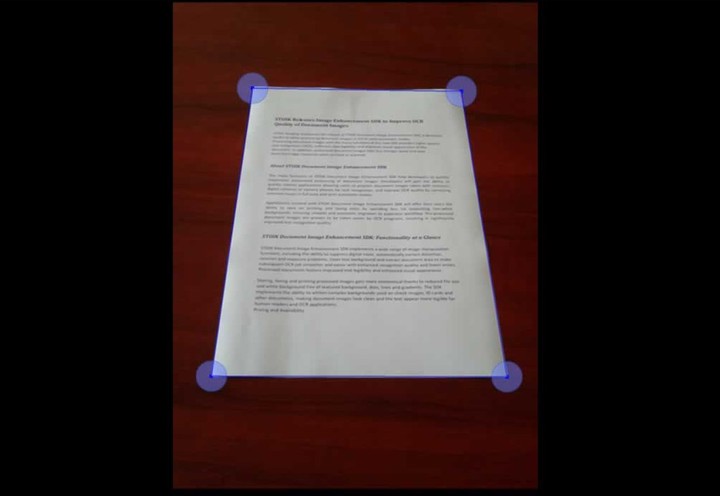

We decided to cast this into a regression based problem where we annotated only the four corner points of the foreground object as our training labels and then used them to train a regression model with 8 continuous valued outputs({x,y} coordinates of all four corners). Once we had these points we implemented a perspective transform to warp the object into a rectangular space for the final cropped output.

For training we used custom aggregated and crowdsourced dataset of ID cards and other documents in various background settings. We implemented our own annotation tool for the above mentioned ground truth annotation. In order to ensure variance, we used both natural camera taken images as well as synthetically generated data by superimposing the already available cropped samples on random backgrounds at different positions, scales and orientation.

Not only this, we also implemented massive data augmentation in order to further multiply our training data that worked simultaneously on the image and the annotated keypoints. Some of the augmentation techniques used were blurring, rotation, scaling,grayscale, color adjustments, dropout, adding noise etc. We used the imgaug library for the whole augmentation pipeline.

Annotation, synthetic data generation, and augmentation were all done in such a way as to ensure the sequence of the four corner points with respect to the object remained same in order to ensure spatial and rotational invariance during training and prediction. The upper left point of the foreground object was always the first label followed by others in a clockwise manner.

Once we had sufficient annotated and augmented data, we trained the regression models. We experimented and benchmarked a number of different algorithms and learning paradigms. We benchmarked ResNet , Squeezenet , VGGNet , Shufflenet in both transfer learning and from scratch settings for a large range of hyperparameter values and benchmarked their performances.

The outputs, as mentioned above were eight continuous labels, hence the final layer was always a linear activation layer. The loss functions used were variations of Mean Squared Error values. This whole concept was applied and tested on a number of applications such as cropping ID cards from background for further processing in an Optical Character Recognition system , cropping Cheque MICR stub for MICR extraction for digital processing, Passport MRZ code extraction, scanned document layout detection etc and they all fit in perfectly in the overall pipeline and gave robust performances.