Sign Language Classification [Bachelor Project]

This was our undergraduate final project, in which we set out to build a system that converts between speech and sign language, specifically between Hindi speech and Indian Sign Language. The speech-to-sign-language subsystem was essentially a derivative of our speech recognition project, with the detected speech mapped to the corresponding sign language visuals in real time. Here I discuss our Indian Sign Language detection subsystem.

As a proof of concept, we first used a dataset of 7,000 2D images of Indian Sign Language and classified them with a modified VGGNet, reaching 99% accuracy. However, 2D data alone was impractical for building a realistic, real-time sign language recognition system. The 2D dataset had simple backgrounds, whereas everyday situations involve more complex ones, and because Indian Sign Language is two-handed we also had to account for occlusion and varying viewing angles. We therefore decided to use a Kinect sensor and collect an RGB-D dataset, so that we could leverage the depth information the Kinect provides.

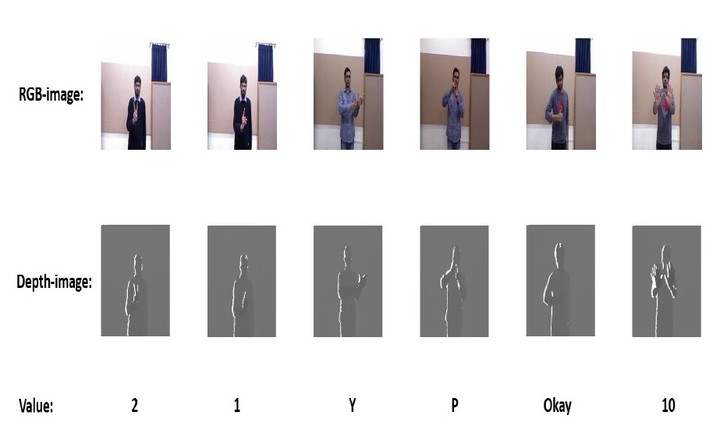

We collected RGB-D data for 48 different Indian signs, with both RGB and depth images of digits, letters of the alphabet, and a few common words. The dataset comprises around 36 images per word in our vocabulary, contributed by 18 different people. We trained a multivariate Gaussian Mixture Model (GMM) on the HSV pixel values of the data to segment the skin regions and intensify the skin pixel areas in the RGB-D images.
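To make the skin segmentation step concrete, here is a minimal NumPy sketch of fitting a full-covariance GMM to HSV skin samples with EM and thresholding the per-pixel log-likelihood. It is not our original code: the function names, the number of components, the regularisation term, and the threshold are all illustrative assumptions, and HSV values are assumed to be scaled to [0, 1].

```python
import numpy as np

def log_gaussian(x, mean, cov):
    """Log density of a multivariate normal at each row of x."""
    d = x.shape[1]
    chol = np.linalg.cholesky(cov)
    # Solve chol @ z = (x - mean).T; sum(z**2) is the squared Mahalanobis distance.
    z = np.linalg.solve(chol, (x - mean).T)
    log_det = 2.0 * np.sum(np.log(np.diag(chol)))
    return -0.5 * (d * np.log(2.0 * np.pi) + log_det + np.sum(z ** 2, axis=0))

def gmm_log_likelihood(x, weights, means, covs):
    """Return per-component weighted log densities and the mixture log-likelihood."""
    comp = np.stack(
        [np.log(w) + log_gaussian(x, m, c) for w, m, c in zip(weights, means, covs)],
        axis=1,
    )
    top = comp.max(axis=1, keepdims=True)  # log-sum-exp for numerical stability
    total = (top + np.log(np.exp(comp - top).sum(axis=1, keepdims=True)))[:, 0]
    return comp, total

def fit_gmm(x, n_components=2, n_iter=50, reg=1e-4, seed=0):
    """Fit a GMM with plain EM. All hyperparameters here are assumptions."""
    rng = np.random.default_rng(seed)
    n, d = x.shape
    means = x[rng.choice(n, n_components, replace=False)]
    covs = np.stack([np.cov(x.T) + reg * np.eye(d)] * n_components)
    weights = np.full(n_components, 1.0 / n_components)
    for _ in range(n_iter):
        # E-step: responsibility of each component for each sample.
        comp, total = gmm_log_likelihood(x, weights, means, covs)
        resp = np.exp(comp - total[:, None])
        # M-step: re-estimate weights, means, and covariances.
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / n
        means = (resp.T @ x) / nk[:, None]
        for k in range(n_components):
            diff = x - means[k]
            covs[k] = (resp[:, k, None] * diff).T @ diff / nk[k] + reg * np.eye(d)
    return weights, means, covs

def skin_mask(hsv_image, params, threshold):
    """Mark pixels whose log-likelihood under the skin GMM exceeds a threshold."""
    h, w, _ = hsv_image.shape
    pixels = hsv_image.reshape(-1, 3).astype(float)
    _, log_lik = gmm_log_likelihood(pixels, *params)
    return (log_lik > threshold).reshape(h, w)
```

The resulting boolean mask could then be used to boost the skin pixels in the image, for example by scaling down everything outside the mask. Note that this sketch treats hue as a linear value and ignores its wrap-around, which a more careful implementation would need to handle.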

Since the amount of data per class was too small to train a robust model, we performed extensive data augmentation (blurring, affine transforms, colour adjustments) to multiply the data before training. With the data in hand, we tried two different approaches. In the first, we stacked the RGB and depth images vertically before passing them to a ResNet-50 classifier for training. This method reached a validation accuracy of 71%.
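The blurring, affine transforms, and colour adjustments mentioned above, together with the vertical stacking of the first approach, can be sketched roughly as follows. This is a simplified NumPy illustration and not our original pipeline: the helper names, the parameter ranges, the use of a plain translation as the affine transform, and the choice to replicate the depth map across three channels are all assumptions.

```python
import numpy as np

def box_blur(img, k=3):
    """Blur an H x W x C image by averaging each k x k neighbourhood (edge-padded)."""
    pad = k // 2
    padded = np.pad(img.astype(float), ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def translate(img, dy, dx):
    """Shift by (dy, dx) pixels, zero-filling uncovered areas.

    A translation is the simplest affine transform; assumes |dy| < H and |dx| < W.
    """
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    src = img[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    out[max(0, dy):max(0, dy) + src.shape[0], max(0, dx):max(0, dx) + src.shape[1]] = src
    return out

def adjust_colour(rgb, gain=1.0, bias=0.0):
    """Apply a linear brightness/contrast change, clipped to the 8-bit range."""
    return np.clip(rgb.astype(float) * gain + bias, 0, 255)

def augment_pair(rgb, depth, rng):
    """Create one augmented RGB-D pair (ranges below are illustrative guesses)."""
    dy, dx = rng.integers(-4, 5, size=2)
    # Geometric changes must be applied identically to RGB and depth.
    rgb_aug = translate(rgb, dy, dx)
    depth_aug = translate(depth, dy, dx)
    # Photometric changes only make sense for the RGB image.
    rgb_aug = adjust_colour(
        box_blur(rgb_aug), gain=rng.uniform(0.8, 1.2), bias=rng.uniform(-10, 10)
    )
    return rgb_aug, depth_aug

def stack_rgbd(rgb, depth):
    """Stack RGB (H x W x 3) on top of depth (H x W) to get a 2H x W x 3 image."""
    depth3 = np.repeat(depth[..., None], 3, axis=2)  # assumption: replicate depth
    return np.concatenate([rgb.astype(float), depth3.astype(float)], axis=0)
```

The stacked array is a single image twice the original height, so it can be fed to a standard image classifier without changing the network's input channels.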

The second approach used a bilinear CNN, with two parallel ResNet architectures processing the RGB and depth images separately, followed by bilinear pooling of their output features before the subsequent dense layers. This approach performed better, with a validation accuracy of 79%, although it was computationally more expensive. Finally, we passed the output of the sign language detection system through Google’s text-to-speech (TTS) API to generate the final speech output.
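For readers unfamiliar with bilinear pooling, the sketch below shows the core operation in NumPy: the outer product of the two streams' feature vectors at each spatial location, averaged over all locations. The random arrays in the usage note stand in for the feature maps of the RGB and depth ResNets. The signed square root and L2 normalisation are steps commonly used with bilinear CNNs and are included here as an assumption, not as a record of our exact configuration.

```python
import numpy as np

def bilinear_pool(feat_a, feat_b, normalize=True, eps=1e-12):
    """Bilinear pooling of two feature maps with the same spatial size.

    feat_a: H x W x C1 (e.g. RGB stream), feat_b: H x W x C2 (e.g. depth stream).
    Returns a vector of length C1 * C2.
    """
    h, w, c1 = feat_a.shape
    assert feat_b.shape[:2] == (h, w), "feature maps must share spatial size"
    a = feat_a.reshape(h * w, c1)
    b = feat_b.reshape(h * w, -1)
    # a.T @ b sums the per-location outer products; divide to average them.
    pooled = (a.T @ b / (h * w)).ravel()
    if not normalize:
        return pooled
    # Assumed post-processing: signed square root, then L2 normalisation.
    pooled = np.sign(pooled) * np.sqrt(np.abs(pooled))
    return pooled / (np.linalg.norm(pooled) + eps)
```

For example, `bilinear_pool(np.random.rand(7, 7, 8), np.random.rand(7, 7, 5))` returns a vector of length 40. The output size grows with the product of the two channel counts, which is one reason this approach costs more compute than simply stacking the images.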