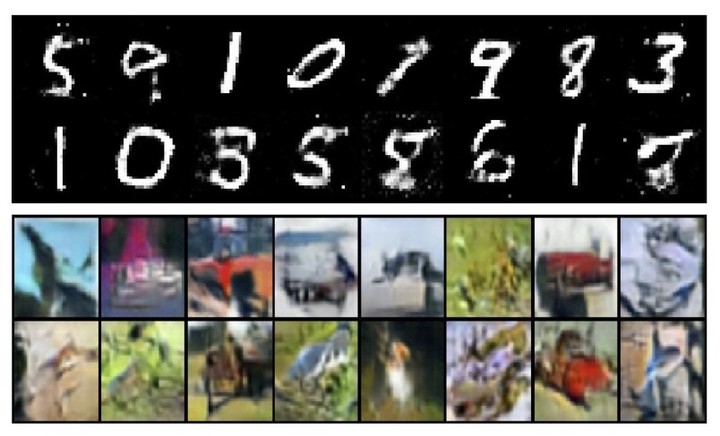

Random images generated by GANs trained on the MNIST (top) and CIFAR-10 (bottom) datasets

In this project, the final project for the COMP551: Applied Machine Learning course, we study the 2014 paper *Generative Adversarial Networks*. We tried to reproduce a subset of the paper's results and performed ablation studies to understand the model's robustness and to evaluate the importance of its various hyperparameters. We also extended the model with newer features to improve its performance on the featured datasets. These extensions change the model's internal structure and are inspired by more recent work in the field.

Generative Adversarial Networks (GANs) were first described in this paper. They are based on a zero-sum, non-cooperative game between a Discriminator (D) and a Generator (G), a setting analysed thoroughly in the field of Game Theory. The special case in which both D and G are multilayer perceptrons is referred to as Adversarial Networks.
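For reference, the game is written in the original paper as a minimax problem over a value function $V(D, G)$. Here $p_{\text{data}}$ is the data distribution and $p_z$ is the prior on the generator's input noise $z$. D is trained to assign high probability to real samples and low probability to generated ones, while G is trained to fool D.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
```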

The provided code was implemented in the now-obsolete Theano framework using Python 2, so it was difficult to reconfigure and set up on our system. We nevertheless managed to hack the code into running and used it to reproduce the results on the MNIST dataset. For the ablation studies and the model extensions, however, we switched to the much more interpretable and relevant PyTorch implementation. The original paper trains the GAN on the MNIST, CIFAR-10 and TFD image datasets. The Toronto Faces Database (TFD) is not accessible without permission, and the provided code does not include scripts for it, so we do not reproduce the TFD results.

GANs are known to be unstable to train, often producing generators that output nonsensical images. We decided to put this notion to the test by tuning some of the hyperparameters involved in training the models. As part of the ablation studies, we experimented with different values for the following:

- Learning Rates: We tuned the learning rates of both Generator and Discriminator models.

- Loss Functions: We experimented with the L2 norm, i.e. the Mean Squared Error (MSE), as the loss function.

- D_steps: The number of times the Discriminator is trained before each update of the Generator. We changed it from 1 to 2 as part of our experiment.
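To make the loss-function and D_steps ablations concrete, here is a minimal, framework-free sketch in plain Python. It assumes the discriminator outputs probabilities in (0, 1). The function names `bce_losses`, `mse_losses` and `train` are hypothetical and are not taken from the PyTorch implementation we used. The MSE variant shown here, with target 1 for real samples and 0 for fake ones, is one possible form of an L2 loss. `d_steps` plays the role of k in Algorithm 1 of the original paper, whose experiments used k = 1.

```python
import math

EPS = 1e-12  # guards against log(0)


def mean(values):
    return sum(values) / len(values)


# d_real: discriminator outputs D(x) on real samples (assumed probabilities in (0, 1)).
# d_fake: discriminator outputs D(G(z)) on generated samples.

def bce_losses(d_real, d_fake):
    """Binary cross-entropy losses of the original GAN.

    The generator uses the non-saturating form -log D(G(z)) suggested in the paper.
    """
    d_loss = (-mean([math.log(r + EPS) for r in d_real])
              - mean([math.log(1 - f + EPS) for f in d_fake]))
    g_loss = -mean([math.log(f + EPS) for f in d_fake])
    return d_loss, g_loss


def mse_losses(d_real, d_fake):
    """Hypothetical L2 / mean-squared-error alternative with targets 1 (real) and 0 (fake)."""
    d_loss = mean([(r - 1) ** 2 for r in d_real]) + mean([f ** 2 for f in d_fake])
    g_loss = mean([(f - 1) ** 2 for f in d_fake])
    return d_loss, g_loss


def train(num_iterations, d_steps, update_d, update_g):
    """Alternate updates: d_steps discriminator updates for every generator update."""
    for _ in range(num_iterations):
        for _ in range(d_steps):
            update_d()
        update_g()


if __name__ == "__main__":
    calls = []
    train(3, d_steps=2,
          update_d=lambda: calls.append("D"),
          update_g=lambda: calls.append("G"))
    print("".join(calls))  # DDGDDGDDG
```

With `d_steps=2`, every generator update is preceded by two discriminator updates, which corresponds to the change we tested.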

As extensions of GAN, we implemented two variants:

- Deep Convolutional Generative Adversarial Networks (DCGAN) are a variation of GAN in which the multilayer perceptrons of the vanilla GAN are replaced with convolutional neural networks (CNNs).

- Conditional Generative Adversarial Networks (cGAN) let us direct the generation process by conditioning the model on additional information, here the class labels.
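The core mechanics of both variants can be illustrated without a deep learning framework. The helpers below are hypothetical, and the sketch rests on two assumptions. First, the DCGAN-style generator uses transposed convolutions with kernel 4, stride 2 and padding 1, a common choice that is not necessarily the one in our code. Second, the cGAN generator is conditioned by concatenating a one-hot class label to a 100-dimensional noise vector, which is one common way of feeding the label in. In a cGAN the discriminator is conditioned on the same label as well; that part is not shown here.

```python
import random


def conv_transpose_out(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_sizes(start=4, num_layers=3):
    """Feature-map sizes as a DCGAN-style generator upsamples a start x start map."""
    sizes = [start]
    for _ in range(num_layers):
        sizes.append(conv_transpose_out(sizes[-1]))
    return sizes


def one_hot(label, num_classes=10):
    """Encode a class label (e.g. an MNIST digit) as a one-hot vector."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def conditional_input(label, noise_dim=100, num_classes=10, rng=None):
    """cGAN generator input: Gaussian noise z concatenated with a one-hot label y."""
    rng = rng or random.Random()
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return z + one_hot(label, num_classes)


if __name__ == "__main__":
    print(generator_sizes())            # [4, 8, 16, 32]
    print(len(conditional_input(3)))    # 110
```

The size formula matches the one documented for PyTorch's `ConvTranspose2d` when dilation is 1 and output padding is 0. With these settings each layer doubles the spatial size, so three layers take a 4x4 map to the 32x32 resolution of CIFAR-10. Fixing the label passed to `conditional_input` is what allows a conditional generator to be asked for a specific class.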