In this project, which was essentially an assignment in COMP558:Fundamentals of Computer Vision course, I implemented the Scale Invariant Feature Transform(SIFT) algorithm from scratch. SIFT is a traditional computer vision feature extraction technique. SIFT features are scale, space and rotationally invariant.

SIFT is a highly involved algorithm and thus implementing it from scratch is an arduous tasks. At an abstract level the SIFT algorithm can be described in five steps

-

Find Scale Space Extrema: We construct the Laplacian(Difference of Gaussian) pyramid for the given image and using this pyramid, we found local extremas in each level of the laplacian pyramid by taking a local area and comparing the intensities in that local region for the same scale as well as the adjacent(next and previous) levels in the pyramid. Two local neighbourhood sizes(33,55) were tried.

-

Keypoint Localization: A large number of keypoints are generated by the first step which might not be useful. Corner cases and low contrast keypoints are discarded. Also a threshold was specified in order to select only strong extremas. A taylor series expansion of scale space is done to get a more accurate value of extrema and those falling below the threshold were discarded.

-

Gradient Calculation: For each keypoint detected, a square neigborhood(17x17 in our case) was taken around them at their respective scales. Intensity gradients and orientation were calculated for the given neighborhood. A gaussian mask of the same size as our neighborhood was used as a weighting mask over gradient magnitude matrix.

-

SIFT Feature Descriptors: SIFT feature descriptors are created by taking histograms of gradients orientations for each keypoint neighborhoods. Orientations are divided into bins of various ranges(36 bins of 10 deg in our case), and for each gradient falling in a bin the gradient magnitude value is added to that particular bin. Once we have the histogram we find the orientation with the highest weighted value. Its the principle orientation and the desriptors(orientation vectors) are shifted counterclockwise such that principle orientation becomes the first bin. This lends SIFT features their rotational invariance.

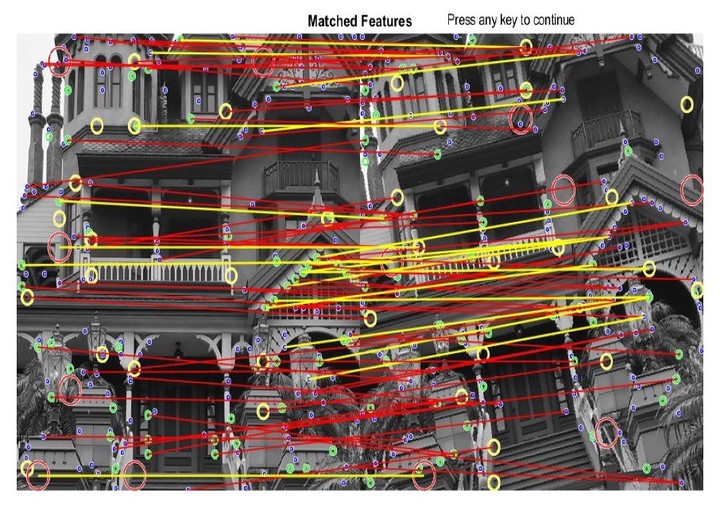

Once we had the SIFT desriptors, I transformed the image and calculated SIFT vectors for the original and transformed images and matched them using bruteforce algorithm i.e Bhattacharyya Distance and visualised(as in figure above) the matches above a certain threshold to test the robustness of the SIFT algorithm.