Online Learning of Temporal Knowledge Graphs

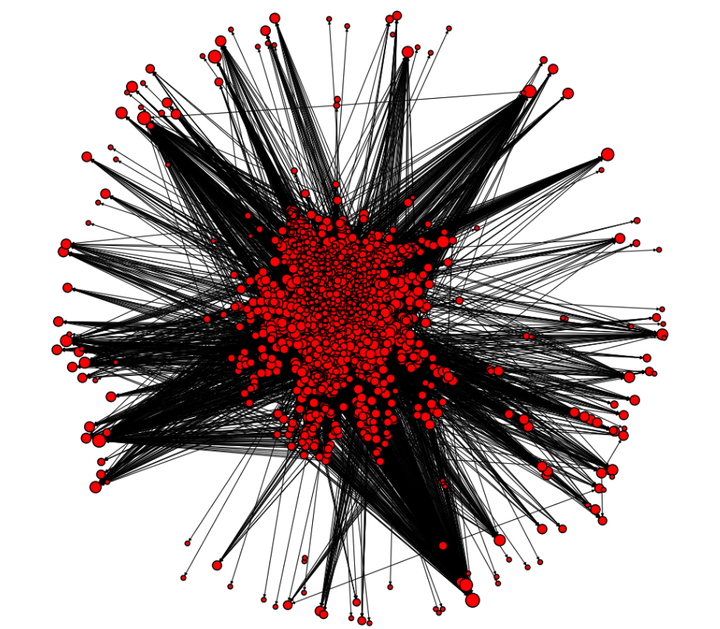

Visualization of the Freebase graph

This project was undertaken as the final project for the COMP 766: Graph Representation Learning course at McGill University.

In many computer science sub-fields, knowledge graphs (KGs) remain a constant abstraction whose usefulness lies in their representational power. However, dynamic environments, such as the temporal streams of social media information, make it increasingly necessary to incorporate additional structure into KGs.

In this project, we applied currently available solutions for incremental knowledge graph embedding to several applications to test their efficiency. We also proposed an embedding-model-agnostic framework for making these models incremental. First, we proposed a window-based incremental learning approach that discards the least frequently occurring facts and performs link prediction on the updated triples. Next, we presented experiments on a model-agnostic meta-learning approach based on Graph Convolutional Networks (GCNs).
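The window-based idea can be illustrated with a minimal sketch. It assumes that facts arrive as batches of (head, relation, tail) triples and that "least frequently occurring" means the lowest occurrence count inside the current window. The class `FactWindow` and the parameters `window_size` and `min_count` are hypothetical names chosen for illustration, not taken from the project.

```python
from collections import Counter, deque
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)


class FactWindow:
    """Keeps the most recent batches of triples and their occurrence counts.

    Hypothetical helper: the eviction and filtering rules are assumptions.
    """

    def __init__(self, window_size: int, min_count: int = 1):
        self.window_size = window_size  # number of batches kept
        self.min_count = min_count      # facts seen less often are discarded
        self.batches = deque()
        self.counts = Counter()

    def update(self, batch: Iterable[Triple]) -> None:
        """Add a batch and evict the oldest one once the window is full."""
        batch = list(batch)
        self.batches.append(batch)
        self.counts.update(batch)
        if len(self.batches) > self.window_size:
            expired = self.batches.popleft()
            self.counts.subtract(expired)
            self.counts = +self.counts  # drop entries whose count fell to zero

    def active_facts(self) -> Set[Triple]:
        """Triples frequent enough in the window to be kept for training."""
        return {t for t, c in self.counts.items() if c >= self.min_count}


window = FactWindow(window_size=2, min_count=2)
window.update([("a", "knows", "b"), ("a", "knows", "c")])
window.update([("a", "knows", "b")])
frequent = window.active_facts()  # only the triple seen twice survives
```

The surviving triples would then be passed to whichever embedding model is in use, which is what keeps this kind of wrapper independent of the embedding model.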

To create edge embedding vectors, we experimented with two methods:

- Concatenating the 128-dimensional Node2Vec embedding vectors of the head and tail nodes to create a 256-dimensional edge embedding

- Subtracting the head embedding from the tail embedding to create a 128-dimensional edge embedding vector

Our best model is the window-based KG incremental learning approach, in which edge representations are computed by subtracting the embedding vectors of the head and tail nodes.
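The two edge-embedding constructions can be sketched in a few lines of NumPy. The function name `edge_embedding` and the random vectors standing in for Node2Vec output are assumptions made for this example only.

```python
import numpy as np


def edge_embedding(head_vec, tail_vec, method="subtract"):
    """Build an edge vector from two node vectors (illustrative helper)."""
    head_vec = np.asarray(head_vec, dtype=float)
    tail_vec = np.asarray(tail_vec, dtype=float)
    if method == "concat":
        # [head ; tail] doubles the dimensionality: 128 + 128 = 256
        return np.concatenate([head_vec, tail_vec])
    if method == "subtract":
        # head subtracted from tail keeps the dimensionality at 128
        return tail_vec - head_vec
    raise ValueError(f"unknown method: {method!r}")


# Random stand-ins for 128-dimensional Node2Vec embeddings.
rng = np.random.default_rng(0)
head = rng.normal(size=128)
tail = rng.normal(size=128)

concat_edge = edge_embedding(head, tail, method="concat")
diff_edge = edge_embedding(head, tail, method="subtract")
```

Subtraction halves the feature dimension compared to concatenation and makes the edge vector depend on the direction from head to tail.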

For the experiment, we cast link prediction as binary classification, with labels 0 and 1 indicating that a link is present or absent, respectively, and used a Random Forest model for training and prediction. The dataset is divided into one training set and nine test sets that act as incremental updates, producing nine graph snapshots; each snapshot adds new nodes and updates edges compared to the previous one.
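The evaluation protocol can be sketched roughly as follows, using scikit-learn's `RandomForestClassifier`. The data here is synthetic, and the helper `split_incremental`, the `train_fraction` value, and the choice to evaluate without retraining between snapshots are all assumptions; the text does not specify them.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Label convention as stated in the text: 0 = link present, 1 = link absent.
LINK_PRESENT, LINK_ABSENT = 0, 1


def split_incremental(X, y, n_updates=9, train_fraction=0.5):
    """Split data into one training set and `n_updates` ordered test sets."""
    n_train = int(len(X) * train_fraction)
    train = (X[:n_train], y[:n_train])
    updates = list(zip(np.array_split(X[n_train:], n_updates),
                       np.array_split(y[n_train:], n_updates)))
    return train, updates


# Synthetic stand-ins for 128-dimensional edge embeddings and their labels.
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 128))
y = rng.integers(0, 2, size=1000)

(train_X, train_y), updates = split_incremental(X, y)

clf = RandomForestClassifier(n_estimators=50, random_state=0)
clf.fit(train_X, train_y)

# One accuracy value per incremental snapshot.
accuracies = [clf.score(update_X, update_y) for update_X, update_y in updates]
```

Because the features and labels are random, the accuracies carry no meaning here; the sketch only shows the shape of the pipeline.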

The second method we experimented with follows a model-agnostic meta-learning approach with Graph Convolutional Networks (GCNs). The idea is to learn a GCN that predicts the embeddings of new nodes from the old embeddings of their neighboring entities in the old graph and, similarly, produces updated representations of old entities from the newly learned embeddings of new entities. These two predictions are iterated jointly. This can be viewed as a learning-to-learn (meta-learning) problem.
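The joint iteration can be illustrated with a heavily simplified sketch. It assumes a single mean-aggregation GCN-style step with a `tanh` nonlinearity and uses fixed random weight matrices where the real approach would use meta-learned ones. The function names, the two weight matrices `w_new` and `w_old`, and the number of iterations are hypothetical.

```python
import numpy as np


def gcn_step(neighbor_embeddings, weight):
    """One GCN-style step: mean over neighbors, linear map, tanh."""
    aggregated = np.mean(neighbor_embeddings, axis=0)
    return np.tanh(aggregated @ weight)


def joint_update(old_emb, new_nodes, adjacency, w_new, w_old, n_iters=2):
    """Alternate between embedding new nodes and refreshing old ones."""
    emb = dict(old_emb)  # shallow copy so the caller's dict is not modified
    for _ in range(n_iters):
        # 1) Predict each new node from its neighbors in the old graph.
        for node in new_nodes:
            nbrs = [emb[n] for n in adjacency.get(node, []) if n in old_emb]
            if nbrs:
                emb[node] = gcn_step(np.stack(nbrs), w_new)
        # 2) Refresh each old node from itself and its new neighbors.
        for node in old_emb:
            nbrs = [emb[n] for n in adjacency.get(node, [])
                    if n in new_nodes and n in emb]
            if nbrs:
                emb[node] = gcn_step(np.stack([emb[node]] + nbrs), w_old)
    return emb


rng = np.random.default_rng(0)
dim = 8
old_emb = {"a": rng.normal(size=dim), "b": rng.normal(size=dim)}
adjacency = {"c": ["a", "b"], "a": ["c"], "b": ["c"]}  # "c" is the new node
w_new = rng.normal(scale=0.1, size=(dim, dim))  # stand-in for learned weights
w_old = rng.normal(scale=0.1, size=(dim, dim))  # stand-in for learned weights

updated = joint_update(old_emb, {"c"}, adjacency, w_new, w_old)
```

In a meta-learning setting, the weights would be trained so that these predictions stay accurate across many simulated graph updates; that training loop is omitted here.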